Case Study - Senyas: Revolutionizing Filipino Sign Language Recognition

Developed a cutting-edge Filipino Sign Language (FSL) recognition system using computer vision and deep learning techniques for real-time translation from video to text.

- Client: Open Source

- Year

- Service: AI and Computer Vision Development

Overview

Senyas is a Filipino Sign Language (FSL) recognition system built in response to the communication challenges faced by the deaf community in the Philippines. The project aims to bridge the gap between FSL users and people unfamiliar with sign language by translating signs from video into text using computer vision and deep learning.

Senyas is open source, and the code is available on GitHub.

The Senyas Advantage

Senyas stands out as a powerful tool for FSL recognition, offering:

- Advanced AI Model: Uses a CNN-LSTM hybrid architecture to recognize both static and dynamic FSL gestures.

- Real-time Processing: Processes live video input and returns translations as the user signs.

- Comprehensive Gesture Recognition: Handles a wide range of FSL gestures, including static alphabet signs and dynamic word signs.

- Accessible Web Application: Provides an intuitive interface for interacting with the FSL recognition system.

- High Performance: Achieves 92% accuracy on test data and 86% in real-world recognition, with fast prediction times (377μs on average) across various devices.

This system significantly reduces communication barriers for the deaf community, providing a reliable alternative to human interpreters and fostering inclusivity.
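Real-time translation from live video usually needs some way to turn noisy per-frame predictions into a stable output. The sketch below shows one common option, a majority vote over the most recent frames. It is an illustration only and not taken from the Senyas codebase: the class name `PredictionSmoother` and the parameters `window`, `min_confidence`, and `min_votes` are hypothetical.

```python
from collections import Counter, deque
from typing import Deque, Optional


class PredictionSmoother:
    """Majority vote over the most recent per-frame predictions (hypothetical helper)."""

    def __init__(self, window: int = 5, min_confidence: float = 0.6,
                 min_votes: int = 3) -> None:
        self.min_confidence = min_confidence
        self.min_votes = min_votes
        # Only the last `window` accepted labels are kept.
        self.history: Deque[str] = deque(maxlen=window)

    def update(self, label: str, confidence: float) -> Optional[str]:
        """Record one frame's prediction; return a label once it is stable."""
        if confidence < self.min_confidence:
            return None  # low-confidence frames are ignored entirely
        self.history.append(label)
        top_label, votes = Counter(self.history).most_common(1)[0]
        return top_label if votes >= self.min_votes else None


smoother = PredictionSmoother()
for label, confidence in [("A", 0.9), ("A", 0.8), ("B", 0.3), ("A", 0.7)]:
    stable = smoother.update(label, confidence)
# `stable` is None until a label has collected at least `min_votes` votes.
```

A larger window gives steadier output at the cost of a slightly delayed translation, so the values here would need tuning against real camera input.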

Key Components of Senyas

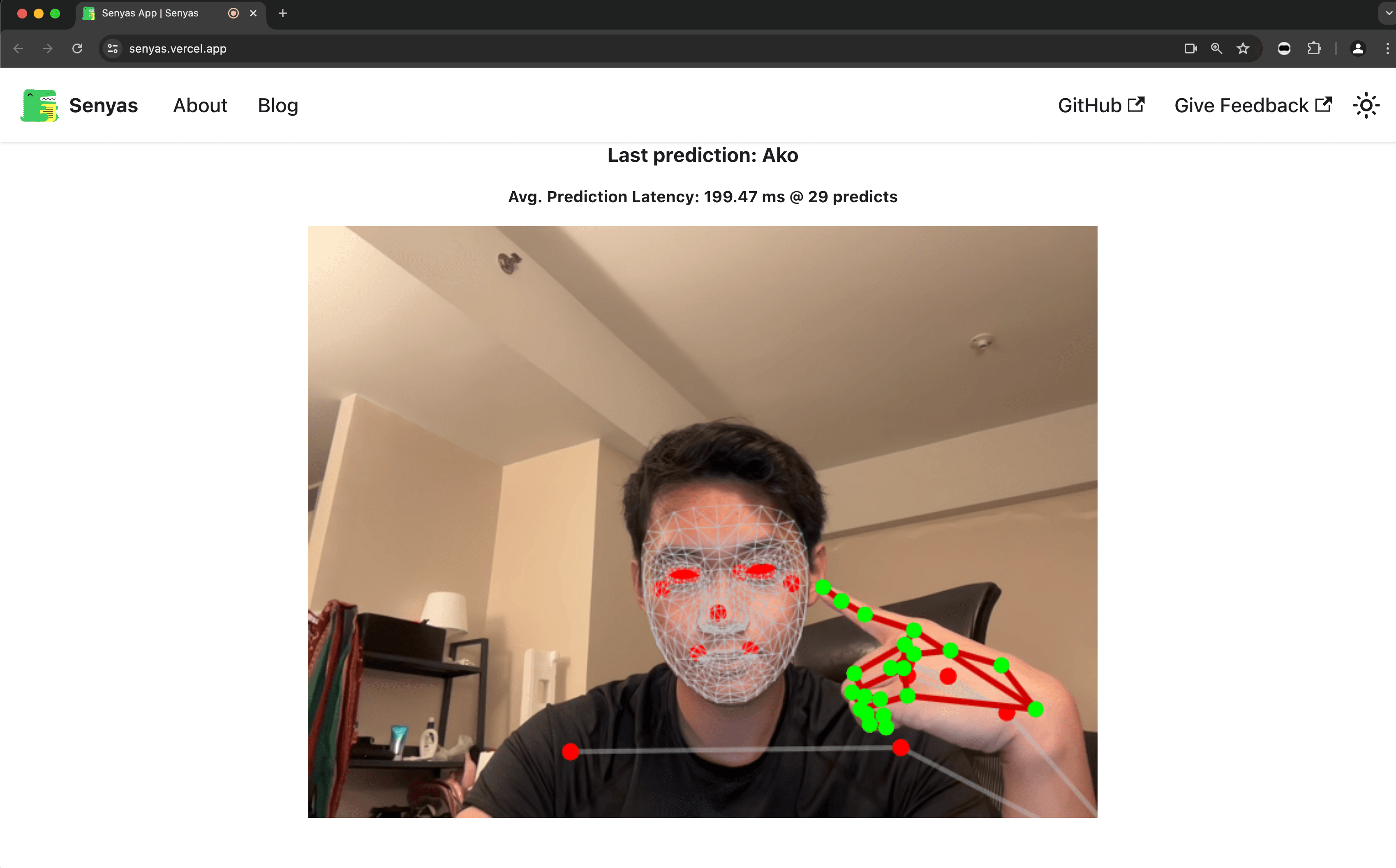

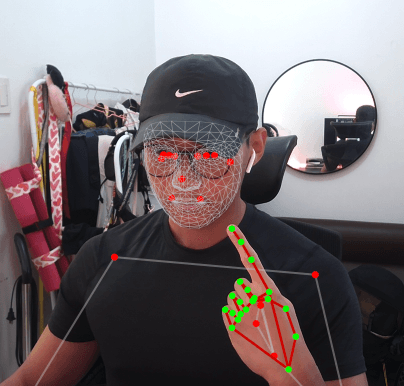

MediaPipe Integration and Prediction

Senyas uses MediaPipe for pose estimation and combines it with the AI model's predictions for accurate FSL recognition.
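As a rough illustration of how landmark output can be prepared for a sequence model, the sketch below normalizes each frame's points and buffers them into a fixed-length window. It assumes each frame arrives as a list of `(x, y)` points with the first point used as the reference (in MediaPipe Hands, landmark 0 is the wrist). The names `normalize_landmarks` and `FrameWindow`, and the window length of 30 frames, are hypothetical and not taken from Senyas.

```python
from collections import deque
from typing import Deque, List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def normalize_landmarks(points: Sequence[Point]) -> List[float]:
    """Shift so the first point is the origin, scale by the largest offset, flatten."""
    origin_x, origin_y = points[0]
    shifted = [(x - origin_x, y - origin_y) for x, y in points]
    # Fall back to 1.0 when all points coincide, to avoid dividing by zero.
    scale = max(max(abs(x), abs(y)) for x, y in shifted) or 1.0
    return [coord / scale for x, y in shifted for coord in (x, y)]


class FrameWindow:
    """Keeps the latest `length` feature vectors for a sequence model."""

    def __init__(self, length: int = 30) -> None:
        self.length = length
        self.frames: Deque[List[float]] = deque(maxlen=length)

    def push(self, points: Sequence[Point]) -> Optional[List[List[float]]]:
        """Add one frame; return the full window once enough frames are buffered."""
        self.frames.append(normalize_landmarks(points))
        if len(self.frames) < self.length:
            return None
        return list(self.frames)
```

Normalizing relative to a reference point makes the features independent of where the hand appears in the frame and how close it is to the camera, which is one reason this step is commonly placed before the classifier.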

Web Application Interface

The Senyas web application provides a user-friendly interface for real-time FSL recognition through a device's camera.

Development Journey

Challenges Overcome

- Limited FSL Dataset: Created a comprehensive FSL dataset from scratch, including both static and dynamic gestures, with data augmentation techniques to enhance model robustness.

- Real-time Processing Requirements: Optimized the model and implementation to achieve low-latency predictions, enabling real-time FSL translation.

- Cross-platform Compatibility: Developed a web-based solution using TensorFlow.js and MediaPipe to ensure accessibility across various devices and browsers.

By addressing these challenges, Senyas emerged as a powerful and accessible tool for FSL recognition, positioning itself as a significant advancement in assistive technology for the deaf community.
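The case study does not say which augmentation techniques were applied to the dataset. As one possible illustration, the sketch below augments a gesture stored as a sequence of landmark frames using a random mirror, a small rotation, a small scale change, and coordinate jitter. It assumes coordinates normalized to the 0 to 1 range; the function `augment_sequence` and all of its parameters are hypothetical.

```python
import math
import random
from typing import List, Tuple

Point = Tuple[float, float]


def augment_sequence(frames: List[List[Point]], rng: random.Random,
                     max_rotation_deg: float = 10.0, max_scale_delta: float = 0.1,
                     jitter: float = 0.01, mirror_prob: float = 0.5) -> List[List[Point]]:
    """Return a randomly mirrored, rotated, scaled, and jittered copy of `frames`."""
    # One transform is drawn per sequence so the motion stays consistent across frames.
    angle = math.radians(rng.uniform(-max_rotation_deg, max_rotation_deg))
    scale = 1.0 + rng.uniform(-max_scale_delta, max_scale_delta)
    # Mirroring imitates a signer using the other hand; disable it if handedness matters.
    mirror = rng.random() < mirror_prob
    cos_a, sin_a = math.cos(angle), math.sin(angle)

    augmented = []
    for frame in frames:
        new_frame = []
        for x, y in frame:
            x, y = x - 0.5, y - 0.5  # centre on the image midpoint
            if mirror:
                x = -x
            x, y = (x * cos_a - y * sin_a) * scale, (x * sin_a + y * cos_a) * scale
            x += rng.gauss(0.0, jitter)  # independent noise per coordinate
            y += rng.gauss(0.0, jitter)
            new_frame.append((x + 0.5, y + 0.5))
        augmented.append(new_frame)
    return augmented


rng = random.Random(42)  # seeded for reproducible augmentation
sample = [[(0.5, 0.5), (0.6, 0.5)], [(0.5, 0.5), (0.6, 0.6)]]
variants = [augment_sequence(sample, rng) for _ in range(4)]
```

If the dataset consists of raw video frames rather than landmarks, the same idea applies with image-level transforms in place of the coordinate math.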

Our Approach

- Deep Learning (CNN-LSTM)

- Computer Vision (MediaPipe)

- Web Development (TensorFlow.js, React)

- Cloud Integration (IBM Cloud)

Developing Senyas was about more than just creating a technology solution; it was about empowering the deaf community and breaking down communication barriers. We aimed to make sign language recognition accessible to everyone, anytime, anywhere.

Lead Developer of Senyas

- Model Accuracy on Test Data: 92%

- Real-world Recognition Accuracy: 86%

- Average Prediction Time: 377μs

- Web App Performance Score (Desktop): 99%